Who needs muscles when you have brains?

Have you ever tried not to hear or read anything about AI for a day? In today’s world, that’s nearly impossible. Artificial intelligence (AI) has become ubiquitous, with edge AI playing a central role in embedded computing.

Edge AI refers to the execution of AI algorithms directly at the data source, such as in a device or machine, rather than in a remote data center. This approach offers clear advantages: lower latencies and enhanced information security through local data processing. While AI is often associated with large server farms, it’s crucial to distinguish between training models and applying them through inference.

Not all AI is the same: the difference between training and inference

Training an AI involves creating and refining models using large datasets. This process is computationally intensive and requires powerful hardware, often entire data centers. This is where inference comes into play. In AI, inference refers to the process of using a trained model to make predictions or decisions based on new, previously unknown data. During training on a large dataset, the model learns to recognize patterns and convert inputs into specific outputs. Once trained, the model can draw conclusions from new data, often using significantly less powerful hardware. This makes inference suitable for low-power platforms such as SMARC modules.

OpenVINO™ and the importance of INT8

Intel’s OpenVINO toolkit enhances inference efficiency. OpenVINO, which stands for “Open Visual Inference and Neural Network Optimization”, is a suite of tools for optimizing and deploying deep learning models on Intel hardware. It enables developers to use pre-trained models, customize them for specific applications, and optimize these models to significantly boost inference performance.

When working with OpenVINO, developers will sooner or later encounter various data types, as the toolkit supports multiple formats. Two examples are INT8 and FP32, which differ in accuracy and memory requirements.

| Data Type | Accuracy range | Memory requirements (Byte) |

| INT8 | -128 to 127 | 1 |

| FP32 | -3.4x1038 to 3.4x1038 | 4 |

Why INT8 is so important

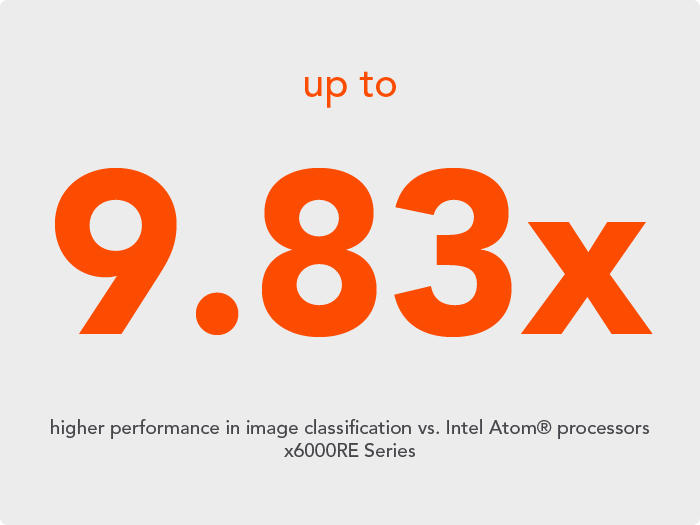

INT8 is particularly noteworthy for providing an optimal balance between computational efficiency and accuracy. Using INT8 reduces memory requirements and accelerates inference, which is especially advantageous on low-power platforms like SMARC modules. Tests have demonstrated that INT8 quantization can deliver significantly higher inference performance without substantially compromising accuracy. Intel has published test results supporting this claim. Despite dating back to 2021, they underscore the relevance of Int8 for edge AI applications today.

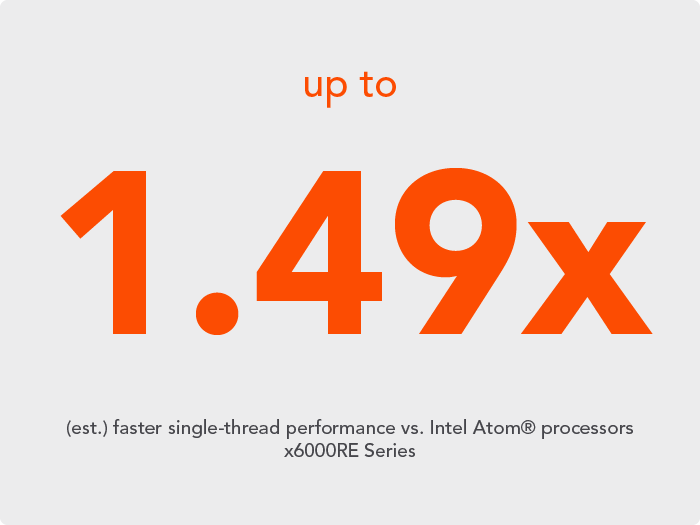

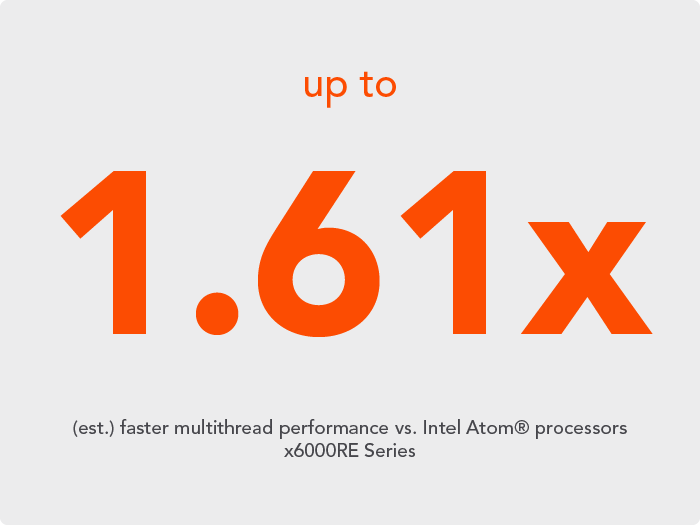

The new Intel Atom® x7000RE Series processors natively support INT8, making them significantly more powerful than the previous x6000RE Series. Intel’s claim of up to 9.83 times better image classification performance highlights the substantial impact of this improvement.

Source: https://www.intel.com/content/www/us/en/products/details/embedded-processors/atom/atomx7000re.html

This native support of INT8 enables optimal use of quantized models, resulting in faster, more efficient inference. It opens up a wide range of applications in various industrial sectors:

-

- Industrial automation: Improved control and monitoring on production lines.

- Motion control: More accurate and faster control of machines and devices.

- Autonomous vehicles and robots: Efficient real-time navigation and decision-making.

- Medical equipment: Increased accuracy and reliability in patient monitoring and laboratory testing.

- Transport management: Optimization of fleet management and traffic monitoring in road and rail systems.

If you are already using SMARC modules, you can easily upgrade to the new x7000RE versions thanks to the module concept.